Fairness Measures in AI Product Development

Fairness measures in AI product development help ensure unbiased outcomes, ethical decision-making, and equal access, which in turn build trust, support compliance, and improve user satisfaction.

As large language models (LLMs) become integral to enterprise products, from recruitment tools to legal advisors and customer support bots, fairness in AI is no longer optional; it’s a core product requirement. Businesses today are under increasing pressure to ensure that their AI systems not only perform well but also operate ethically, transparently, and without bias.

Why? Because users are no longer passive consumers of AI outputs: they question results, spot inconsistencies, and challenge perceived unfairness. A single biased recommendation or discriminatory output can erode user trust, trigger regulatory concern, and damage brand reputation. With AI fairness now linked directly to product adoption and user retention, ignoring it is a business risk.

In this blog, we’ll unpack what purpose fairness measures serve in AI product development, especially for teams building LLM-powered tools. We’ll cover how fairness intersects with accuracy, how it can be measured and enforced, and how product teams can bake it into the LLM development lifecycle, from data collection to model evaluation.

Let’s explore how to build fair AI systems by design, rather than patching them after deployment.

Why Fairness Matters in AI Products

Fairness in AI isn’t optional; it’s foundational to trust, usability, and long-term success.

Overlooked Problem in Training Data

LLMs are trained on massive datasets, often scraped from the internet. While this scale enables broad language fluency, it also introduces harmful patterns, stereotypes, and institutional biases. If these aren’t addressed, the LLM will reflect and reinforce them, often subtly, sometimes dangerously.

What purpose do fairness measures serve in product development? They prevent these biases from shaping outcomes. Without them, users may receive skewed recommendations, misclassified inputs, or even discriminatory outputs based on race, gender, or other attributes.

Reputational, Legal, and Ethical Risk

Ignoring fairness isn’t just bad ethics; it’s bad business. In regulated sectors like finance or healthcare, biased LLM behavior could trigger compliance violations or lawsuits. In consumer applications, even a single incident can go viral, eroding trust. That’s why product teams must proactively define and monitor fairness criteria throughout the model lifecycle.

Marginalized Users Are Often Most Affected

Users at the edges, such as speakers of non-dominant languages, people from underrepresented groups, or those with rare usage patterns, often fall outside the range where a model performs well. Yet these users are real, vocal, and critical to inclusivity. Fairness measures ensure your AI product doesn’t just work for the majority; it works equitably for all.

In short, what purpose do fairness measures serve in AI product development? They protect your users, your brand, and your bottom line.


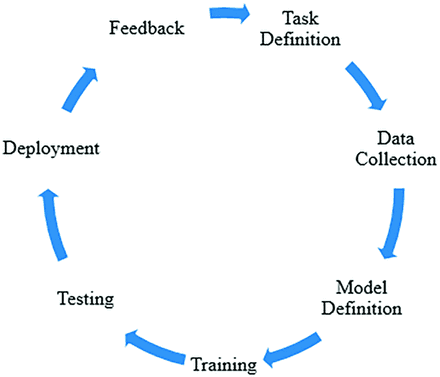

Where Fairness Fits in the AI Product Lifecycle

Fairness must be embedded from the start, not patched in after the product is live.

Too many teams treat fairness as an afterthought, but it needs to be a priority throughout the entire AI product development lifecycle. Why? Because once a biased model is deployed, fixing it becomes much harder, and the reputational damage may already be done.

Source: American Society of Neuroradiology

Here’s how to build fairness into every stage:

Define Fairness Objectives Early

Before writing code or collecting data, product teams must ask:

- Who might be unfairly impacted?

- What outcomes are unacceptable?

- What fairness metrics should we track?

This clarity sets the tone for responsible development.

Audit for Representation and Bias

Biased data leads to biased outputs. Use techniques like:

- Stratified sampling across demographics

- Exclusion checks for offensive or skewed language

- Augmentation for underrepresented groups

Ensure your training set reflects the diversity of real-world users.
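As an illustration of the stratified-sampling step, here is a minimal sketch. It assumes each training record is a dict carrying a demographic or language label under a field name you choose (the `dialect` key in the test is hypothetical). The function draws the same number of records from every group and reports which groups could not fill their quota, since those groups are the candidates for augmentation.

```python
import random
from collections import defaultdict


def stratified_sample(records, group_key, per_group, seed=0):
    """Draw up to `per_group` records from every group found under `group_key`.

    Returns (sample, shortfalls). `shortfalls` maps each group that had fewer
    than `per_group` records to how many were missing. This sketch does not
    oversample, so shortfalls mark groups that need more data or augmentation.
    """
    rng = random.Random(seed)  # fixed seed keeps the audit reproducible
    by_group = defaultdict(list)
    for record in records:
        by_group[record[group_key]].append(record)

    sample, shortfalls = [], {}
    for group in sorted(by_group):
        items = by_group[group]
        k = min(per_group, len(items))
        sample.extend(rng.sample(items, k))
        if k < per_group:
            shortfalls[group] = per_group - k
    return sample, shortfalls
```

Downsampling large groups to equal size is only one option. Many teams keep all the data and rebalance with weights instead, which avoids throwing examples away.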

Use Fairness-Aware Learning Techniques

Integrate techniques such as:

- Reweighing

- Adversarial debiasing

- Constraint-based optimization

These approaches actively reduce unfair patterns during model learning.
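Of the three techniques, reweighing is the easiest to show in a few lines. The sketch below follows the classic Kamiran and Calders formulation. Each example in group g with label y gets the weight w(g, y) = P(g) · P(y) / P(g, y). After weighting, group membership and label are statistically independent in the training data. Adversarial debiasing and constraint-based optimization are not shown here.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """One weight per example: w(g, y) = P(g) * P(y) / P(g, y).

    Combinations of group and label that are rarer than independence would
    predict get weights above 1, and over-represented combinations get weights below 1.
    """
    n = len(labels)
    n_group = Counter(groups)
    n_label = Counter(labels)
    n_joint = Counter(zip(groups, labels))
    return [
        n_group[g] * n_label[y] / (n * n_joint[(g, y)])
        for g, y in zip(groups, labels)
    ]
```

The resulting list can be passed to any trainer that accepts per-example weights. For example, many scikit-learn estimators take it through the `sample_weight` argument of `fit`.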

Test for Disparities, Not Just Accuracy

Don’t stop at performance benchmarks. Evaluate across subgroups:

- False positives by gender or race

- Token bias in prompt completion

- Edge-case behavior

Track fairness metrics alongside accuracy, latency, and cost.
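Token bias in prompt completion is often probed with counterfactual prompts. These are templates that differ only in a demographic term. The sketch below assumes you supply a `score_fn` that calls your model and scores the completion, for example with a sentiment or refusal classifier. The `stub_score` function here is a hypothetical stand-in so the example runs without a model.

```python
def counterfactual_gaps(templates, group_terms, score_fn):
    """Fill each template's {group} slot with every term, score each prompt,
    and return the spread (max - min) of scores per template.

    A large spread means the output changes materially when only the
    demographic term changes.
    """
    gaps = {}
    for template in templates:
        scores = [score_fn(template.format(group=term)) for term in group_terms]
        gaps[template] = max(scores) - min(scores)
    return gaps


def stub_score(prompt):
    # Hypothetical stand-in for "call the LLM, then score the completion".
    return 0.25 if "female" in prompt else 0.75


templates = ["Write a reference letter for a {group} engineer."]
print(counterfactual_gaps(templates, ["male", "female"], stub_score))
```

With real models, sample several completions per prompt and average the scores. A single completion per prompt is too noisy to support conclusions.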

Monitor Fairness in the Real World

Post-launch monitoring is critical. Real users surface new edge cases. Use real-time fairness dashboards, feedback loops, and human-in-the-loop validation to maintain ethical performance.
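A real-time fairness dashboard needs a metric that updates as decisions stream in. Here is a minimal sketch, assuming each production decision can be logged with a group label and a positive/negative outcome. It tracks per-group positive rates over a sliding window and raises an alert when the gap exceeds a limit. The window size, gap limit, and minimum count are illustrative defaults, not recommendations.

```python
from collections import defaultdict, deque


class RollingParityMonitor:
    """Per-group positive-outcome rates over the last `window` decisions."""

    def __init__(self, window=1000, max_gap=0.1, min_count=30):
        self.events = deque(maxlen=window)  # oldest events fall off automatically
        self.max_gap = max_gap
        self.min_count = min_count  # ignore groups with too few recent events

    def record(self, group, positive):
        self.events.append((group, bool(positive)))

    def rates(self):
        totals, positives = defaultdict(int), defaultdict(int)
        for group, positive in self.events:
            totals[group] += 1
            positives[group] += positive
        return {
            g: positives[g] / totals[g]
            for g in totals
            if totals[g] >= self.min_count
        }

    def alert(self):
        """True when the gap between the best- and worst-served group is too large."""
        rates = self.rates()
        if len(rates) < 2:
            return False
        return max(rates.values()) - min(rates.values()) > self.max_gap
```

An alert from a monitor like this is a trigger for human-in-the-loop review, not proof of bias. Shifts in who is using the product can move the rates too.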

So what purpose do fairness measures serve in AI product development? The answer is clear: they should guide every phase of the journey.

Types of Fairness Measures in AI

Various AI use cases necessitate distinct fairness strategies; there is no universally applicable metric.

Statistical Parity

Statistical parity ensures that outcomes (like approvals or recommendations) are distributed similarly across demographic groups. For instance, if an AI system recommends loans, statistical parity would require applicants of different races or genders to have similar approval rates, irrespective of their credit history or income.

While it sounds ideal, enforcing strict statistical parity can sometimes override legitimate signal differences in the data. That’s why it must be applied carefully, especially in high-stakes contexts.
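Statistical parity is usually reported as the gap in selection rate between groups. The selection rate is the share of each group that receives the positive outcome. This minimal sketch assumes binary predictions (1 = approved) and one group label per applicant. As the definition says, it looks only at predictions and group membership, not at credit history or income.

```python
def selection_rates(y_pred, groups):
    """Share of positive predictions (e.g. loan approvals) within each group."""
    totals, positives = {}, {}
    for pred, group in zip(y_pred, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + (1 if pred == 1 else 0)
    return {g: positives[g] / totals[g] for g in totals}


def statistical_parity_difference(y_pred, groups):
    """Largest selection-rate gap between any two groups; 0 means exact parity."""
    rates = selection_rates(y_pred, groups)
    return max(rates.values()) - min(rates.values())
```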

Equal Opportunity

This measure focuses on whether qualified individuals from different groups have the same chance of being correctly selected. For example, in a hiring model, do qualified women and men get shortlisted at the same rate?

Equal opportunity preserves merit-based selection while ensuring fair access to opportunity, which makes it a popular choice in high-stakes domains such as hiring and healthcare.
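Equal opportunity compares true positive rates, meaning the share of truly qualified people the model selects in each group. The sketch below assumes binary ground-truth labels (1 = qualified) and binary predictions (1 = shortlisted). Unqualified candidates are ignored by design, and groups with no qualified members are left out of the comparison.

```python
def true_positive_rates(y_true, y_pred, groups):
    """Per-group share of qualified people (y_true == 1) that the model selects."""
    qualified, selected = {}, {}
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            qualified[group] = qualified.get(group, 0) + 1
            selected[group] = selected.get(group, 0) + (1 if pred == 1 else 0)
    return {g: selected[g] / qualified[g] for g in qualified}


def equal_opportunity_difference(y_true, y_pred, groups):
    """Largest true-positive-rate gap between groups; 0 means equal opportunity."""
    tpr = true_positive_rates(y_true, y_pred, groups)
    return max(tpr.values()) - min(tpr.values())
```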

Demographic Parity

Demographic parity is often used as another name for statistical parity. In recommendation systems (e.g., job platforms, streaming services), the same idea is usually applied to exposure: each group should receive a fair share of visibility in the model’s output, because visibility can impact outcomes.

This helps ensure that exposure isn’t skewed by historical imbalances in the training data.
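Exposure in a ranked list is often measured with a position discount, because items near the top get far more attention. This sketch uses the common logarithmic discount 1 / log2(rank + 1), as in DCG-style metrics. That discount is an assumption, so substitute your own click or attention model if you have one. The sketch returns each group's share of the total exposure.

```python
import math


def exposure_share(ranking_groups):
    """Share of position-discounted exposure each group gets in one ranked list.

    `ranking_groups` lists the group of the item at rank 1, rank 2, and so on.
    """
    exposure = {}
    for rank, group in enumerate(ranking_groups, start=1):
        exposure[group] = exposure.get(group, 0.0) + 1.0 / math.log2(rank + 1)
    total = sum(exposure.values())
    return {g: e / total for g, e in exposure.items()}
```

Consider the ranking `["a", "b", "a", "b"]`. Each group supplies half the items, yet group "a" receives roughly 59% of the exposure, simply because its items sit one position higher. Comparing exposure shares with each group's share of the candidate pool is one way to spot this skew.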

So, what purpose do fairness measures serve in AI product development? They help product teams quantify, correct, and control algorithmic bias before it harms users or the business.

Fairness isn’t a one-time fix; it’s a product discipline that must span the full AI development lifecycle. Explore the LLM Development Life Cycle to see how fairness fits into every phase.

Technical & Product Trade-Offs

Striking a balance between fairness and performance is a core challenge in AI product development.

Fairness vs. Accuracy

Product teams often worry that increasing fairness will reduce a model’s predictive accuracy. But the reality is more nuanced. Optimizing for aggregate accuracy tends to favor the majority group, because errors on a small group barely move the overall number. Fairness ensures that minority or edge-case users aren’t systematically misclassified or ignored.
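Here is a worked example with hypothetical numbers. Suppose 90% of users belong to a majority group on which the model is 95% accurate, and 10% belong to a minority group on which it is 60% accurate. Overall accuracy is the population-weighted average of the two:

```latex
\text{Accuracy}_{\text{overall}} = 0.90 \times 0.95 + 0.10 \times 0.60 = 0.855 + 0.060 = 0.915
```

The headline figure of 91.5% looks strong. Yet the minority group sees a 40% error rate, eight times the majority group's 5%.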

Sometimes, optimizing for both is possible, with trade-offs in model complexity or training cost. The key is to frame fairness not as an accuracy reducer, but as a quality enhancer for all users. That’s especially true when deploying models in regulated industries where fairness failures can carry steep legal penalties.

To navigate this, product teams need to be clear about what purpose fairness measures serve in AI product development and integrate fairness metrics at every checkpoint. A dedicated LLM development company can help operationalize this.
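One simple way to make the fairness/accuracy trade-off explicit is post-hoc threshold selection. The idea is to sweep the decision threshold on a validation set and keep the most accurate threshold whose selection-rate gap stays within an agreed limit. This is a minimal sketch, assuming model scores in [0, 1], binary labels, and a single shared threshold. It is one option among several, not a general recipe.

```python
def pick_threshold(scores, y_true, groups, thresholds, max_gap):
    """Return (threshold, accuracy, gap) for the most accurate threshold whose
    selection-rate gap between groups is at most `max_gap`, or None if no
    threshold satisfies the constraint."""
    best = None
    for t in thresholds:
        preds = [1 if s >= t else 0 for s in scores]
        accuracy = sum(p == y for p, y in zip(preds, y_true)) / len(y_true)

        # Selection rate per group at this threshold.
        rates = {}
        for g in set(groups):
            member_preds = [p for p, grp in zip(preds, groups) if grp == g]
            rates[g] = sum(member_preds) / len(member_preds)
        gap = max(rates.values()) - min(rates.values())

        if gap <= max_gap and (best is None or accuracy > best[1]):
            best = (t, accuracy, gap)
    return best
```

Running the sweep at several values of `max_gap` shows how much accuracy each step toward parity costs on your data. That figure gives product and engineering teams something concrete to negotiate over.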

Fairness vs. Personalization

Hyper-personalized AI experiences often rely on behavioral data, which can reinforce existing biases. For example, if an AI recommendation engine gives fewer opportunities to marginalized users based on “past engagement,” it’s perpetuating inequality under the guise of relevance.

This is where product managers must ask:

- Are we optimizing for individual satisfaction or equitable access?

- Is personalization masking systemic bias?

It’s not about eliminating personalization; it’s about designing AI products that treat fairness as a constraint, not an afterthought.

When building enterprise-grade LLM apps, these trade-offs should be addressed early, with engineering and product teams aligned on acceptable thresholds.
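Once teams agree on acceptable thresholds, the check can run automatically before each release. Here is a minimal sketch of such a gate. The metric names in the test (`spd`, `eod`) are hypothetical, and the limits would come from your own product requirements.

```python
def fairness_gate(metrics, thresholds):
    """Compare measured disparity metrics with agreed maximums.

    Returns a list of human-readable failures; an empty list means the model
    passes. A metric with an agreed limit but no measurement counts as a
    failure, so gaps in evaluation are not silently ignored.
    """
    failures = []
    for name, limit in thresholds.items():
        value = metrics.get(name)
        if value is None:
            failures.append(f"{name}: not measured")
        elif value > limit:
            failures.append(f"{name}: {value:.3f} exceeds limit {limit:.3f}")
    return failures
```

In a CI pipeline, a non-empty result would typically print the failures and exit with a non-zero status to block the release.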

Building Fairness Into Your Product Development Process

Fairness requires a repeatable, testable, and scalable process.

Data Auditing

Bias often starts with the dataset. Conduct comprehensive audits to identify skewed representation, exclusion of minorities, or over-indexing on dominant voices. Techniques include:

- Demographic distribution checks

- Toxicity and stereotype filters

- Balanced sampling or data augmentation

The goal is not perfect parity but to avoid reinforcing existing social and systemic biases.
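A demographic distribution check can compare each group's share of the dataset against a reference share. The reference might be the expected user base of the product. The sketch below assumes you can label each record with a group and that you have such reference shares; the language codes in the test are hypothetical. In keeping with "not perfect parity", it flags only groups that fall short by more than a tolerance.

```python
from collections import Counter


def representation_gaps(labels, reference, tolerance=0.05):
    """Return {group: (observed_share, expected_share)} for every group whose
    share of the dataset falls below its reference share by more than
    `tolerance`. Over-represented groups are not flagged."""
    counts = Counter(labels)
    n = len(labels)
    gaps = {}
    for group, expected in reference.items():
        observed = counts.get(group, 0) / n
        if expected - observed > tolerance:
            gaps[group] = (observed, expected)
    return gaps
```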

Integrate Algorithmic Audits

Beyond the data, the model’s behavior must be validated continuously. Algorithmic audits include:

- Disaggregated performance metrics across user segments

- False positive/negative analysis

- Attention layer tracking for interpretability
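Disaggregated metrics and false positive/negative analysis can be computed together from a per-segment confusion matrix. This minimal sketch assumes binary labels and predictions encoded as 0/1 and one segment label per example. Attention-based interpretability depends on the model internals and is not covered here.

```python
def error_rates_by_segment(y_true, y_pred, segments):
    """Per-segment accuracy, false positive rate (FPR) and false negative rate (FNR).

    A rate is None when the segment has no examples of the class it is
    conditioned on (no negatives for FPR, no positives for FNR).
    """
    counts = {}
    for truth, pred, seg in zip(y_true, y_pred, segments):
        c = counts.setdefault(seg, {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
        if pred == 1:
            c["tp" if truth == 1 else "fp"] += 1
        else:
            c["fn" if truth == 1 else "tn"] += 1

    report = {}
    for seg, c in counts.items():
        positives = c["tp"] + c["fn"]
        negatives = c["fp"] + c["tn"]
        report[seg] = {
            "accuracy": (c["tp"] + c["tn"]) / (positives + negatives),
            "fpr": c["fp"] / negatives if negatives else None,
            "fnr": c["fn"] / positives if positives else None,
        }
    return report
```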

What purpose do fairness measures serve at this stage? They show the AI engineering team where to tune prompts, reweight examples, or rearchitect components to mitigate bias.

Human-in-the-Loop (HITL) Validation

Automated metrics are not enough. Real users and subject-matter experts must be embedded in the testing and evaluation loop. Use HITL pipelines to:

- Flag unintended behavior early

- Provide edge-case examples for retraining

- Score model outputs for social sensitivity
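A HITL pipeline needs a rule for which outputs reach a human. Here is a minimal sketch, assuming each model output comes with a confidence score. It sends low-confidence or sensitive-topic outputs to a review queue and stores reviewer verdicts as candidate edge-case examples for retraining. The keyword list and confidence cutoff are hypothetical placeholders; keyword matching is crude, and production systems usually rely on a classifier instead.

```python
from dataclasses import dataclass, field


@dataclass
class ReviewRouter:
    min_confidence: float = 0.7
    # Hypothetical placeholder terms; replace with your own sensitivity policy.
    sensitive_terms: tuple = ("religion", "ethnicity", "disability", "gender")
    queue: list = field(default_factory=list)
    retraining_examples: list = field(default_factory=list)

    def route(self, text, confidence):
        """Send low-confidence or sensitive outputs to humans; auto-approve the rest."""
        lowered = text.lower()
        touches_sensitive = any(term in lowered for term in self.sensitive_terms)
        if confidence < self.min_confidence or touches_sensitive:
            self.queue.append(text)
            return "human_review"
        return "auto_approve"

    def record_verdict(self, text, acceptable, note=""):
        """Store a reviewer's judgment as a candidate edge-case example."""
        if text in self.queue:
            self.queue.remove(text)
        self.retraining_examples.append(
            {"text": text, "acceptable": acceptable, "note": note}
        )
```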

How Muoro Helps Operationalize Fairness

At Muoro, we help organizations implement fairness by design, not after the fact. As an LLM application development company, our teams specialize in:

- Building evaluation pipelines with fairness metrics

- Integrating bias detection tools into CI/CD

- Ensuring alignment with privacy, DEI, and compliance goals

Whether you need support in building an ethical AI app or auditing your existing GenAI stack, our LLM product development expertise ensures fairness is part of your product DNA. That’s how we make sure fairness measures serve their purpose in AI product development.

Final Thoughts: Fairness Is a Feature, Not an Afterthought

Integrating fairness into AI product development is no longer optional; it’s essential for user trust, brand integrity, and long-term scalability. From training data to evaluation loops, every decision made during development affects how inclusive and responsible your product will be.

At Muoro, we believe fairness isn’t just a compliance goal; it’s a competitive edge. As a company specializing in the development of large language models, we assist you in designing ethical, high-performing AI systems that are effective for all users.

Whether you're refining an existing model or building a GenAI product from scratch, our team ensures fairness is embedded throughout the LLM development life cycle.

Building LLMs or GenAI tools? Let’s make them fair, useful, and scalable.

FAQs

What purpose do fairness measures serve in product development?

Fairness measures ensure that products serve all users equitably, reduce bias, build trust, and prevent unintended discrimination across diverse user groups and edge-case scenarios.

What role do fairness measures play in AI product development?

In AI product development, fairness measures mitigate algorithmic bias, promote ethical outcomes, and ensure compliance, especially when deploying models that influence decisions in finance, healthcare, hiring, or public services.

What purpose do fairness measures serve in AI product development?

They help deliver unbiased, trustworthy, and inclusive AI experiences by addressing dataset imbalances, monitoring model behavior, and improving outcomes for marginalized or underrepresented users across different contexts.

Why are LLM responses often accurate, relevant, and well-rounded?

LLMs are trained on diverse, large-scale datasets and optimized with feedback loops, enabling them to generate contextually rich, logically coherent, and human-like responses across a wide range of topics.

What is one challenge in ensuring fairness in generative AI?

A major challenge is biased training data, which can produce outputs that unintentionally reinforce stereotypes, marginalize users, or exclude underrepresented perspectives during content generation.

What does the principle of fairness in GenAI entail?

It means designing systems that produce equitable outputs across demographics, avoid harmful bias, and uphold ethical standards through careful data curation, algorithmic audits, and inclusive evaluation practices.